Over the past few years, we have experimented with many methodologies in an ongoing search for a better way to help our clients define the User Experience of their digital products. We have learnt to better understand consumer needs using User Research techniques such as Jobs-to-be-Done. We have tested new product concepts by gathering the reactions of potential consumers through Google Ventures' Design Sprint.

We have implemented continuous experimentation processes to improve key success metrics.

We have had the opportunity to use these methodologies to help large companies, such as Lastminute.com or the Ethical Bank Group, and young, fast-growing start-ups, such as the fintech Euclidea.

Creating prototypes helps to quickly explore new product concepts or new functionalities. But how is it possible to evaluate their effectiveness? At Moze, we do this through interviews: we meet potential users of the product we are working on in person, show them the prototypes we have created and gather feedback that can help us improve those prototypes.

In this article we describe two techniques that we routinely use during prototype interviews to answer the following questions:

- Do the people interviewed understand the value we want to express?

- Are the interviewees interested in the solution?

1. Do the people interviewed understand the value we want to express?

To answer this question, we took inspiration from the Golden Circle idea developed by Simon Sinek.

"People don't buy what you do; they buy why you do it. And what you do simply proves what you believe."

— Simon Sinek

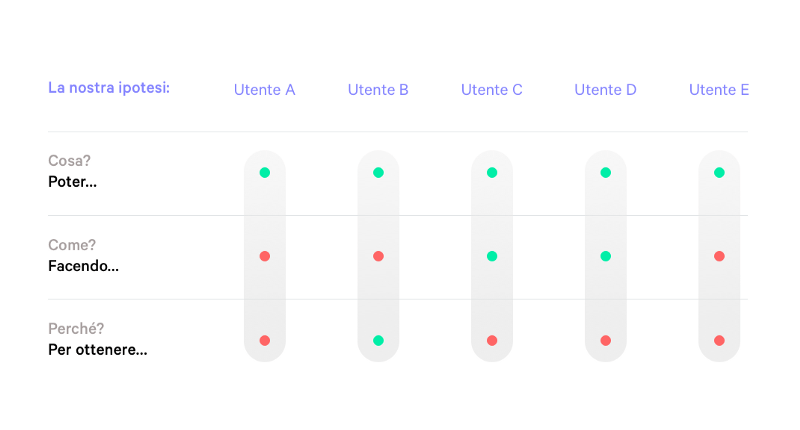

- For each product, we define the value hypothesis according to three criteria:

  - What: what can it be used for?

  - How: how does it work and how can it be used?

  - Why: what is the end result, the added value for the user?

- We show the prototype to several people, and the interviewer tries to capture how each of them perceives its value (‘What does this solution enable you to do?’, ‘How does it work?’, ‘What superpower would this solution give you?’).

- After the interviews, the project team compares the initial hypotheses with the real perception of the people interviewed.

2. Are the people interviewed interested in the solution?

The first step is to understand whether the prototype effectively expresses the value of the solution it represents. The next step is usually to assess how concretely a person is interested in the solution.

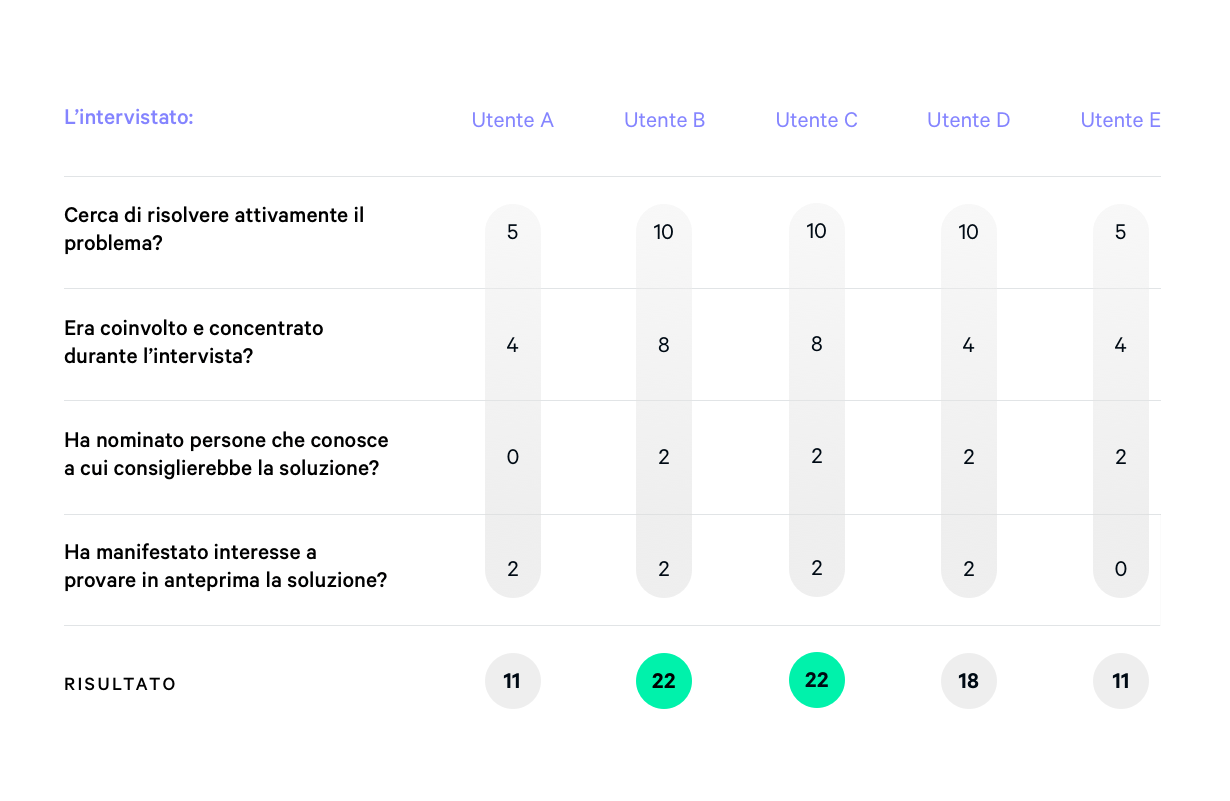

To assess interest accurately, we draw on Alistair Croll's framework, described in the book “Lean Analytics”: following each interview, we try to answer four basic questions. Each answer is expressed as a score from 0 to 10.

A total of 20 points, out of a possible 40, is the pass threshold, i.e. it indicates that the person has expressed a concrete interest in the solution.
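As a rough illustration of the arithmetic, the scoring can be sketched in a few lines of Python. This is only a sketch under stated assumptions: the four questions are treated as unnamed positions in a tuple, the total is taken to be the simple sum of the four scores, a total of exactly 20 is assumed to count as a pass, and names such as `InterviewScore` and `share_interested` are hypothetical, not part of the framework.

```python
from dataclasses import dataclass
from typing import List, Tuple

MAX_PER_QUESTION = 10  # each answer is scored from 0 to 10
NUM_QUESTIONS = 4      # four questions are answered after each interview
THRESHOLD = 20         # assumption: a total of 20 or more counts as concrete interest


@dataclass
class InterviewScore:
    """Scores recorded for one interviewee (hypothetical structure)."""

    interviewee: str
    answers: Tuple[int, ...]  # one score per question, in a fixed order

    def __post_init__(self) -> None:
        # Reject incomplete or out-of-range score sheets early.
        if len(self.answers) != NUM_QUESTIONS:
            raise ValueError(f"expected {NUM_QUESTIONS} scores, got {len(self.answers)}")
        for score in self.answers:
            if not 0 <= score <= MAX_PER_QUESTION:
                raise ValueError(f"score {score} is outside 0..{MAX_PER_QUESTION}")

    @property
    def total(self) -> int:
        # Assumption: the overall score is the plain sum of the four answers.
        return sum(self.answers)

    @property
    def is_interested(self) -> bool:
        return self.total >= THRESHOLD


def share_interested(scores: List[InterviewScore]) -> float:
    """Fraction of interviewees whose total reaches the threshold."""
    if not scores:
        return 0.0
    return sum(s.is_interested for s in scores) / len(scores)


if __name__ == "__main__":
    # Made-up scores, purely for illustration.
    round_one = [
        InterviewScore("Interviewee A", (7, 6, 5, 4)),
        InterviewScore("Interviewee B", (3, 4, 5, 2)),
    ]
    for s in round_one:
        print(s.interviewee, s.total, s.is_interested)
    print(share_interested(round_one))
```

Keeping one record per interviewee makes it easy to compare rounds of interviews, for example by tracking how the share of interested people changes between two versions of a prototype.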

After the interview is over, we also usually invite the interviewee by e-mail to join a “beta” programme, with the promise of a preview of all news about the project launch. In this way, we can assess whether the person is actually willing to “give up something” (their e-mail address) in exchange for the possibility of staying informed about the initiative.

This scheme helps us judge how desirable the designed solution is by assigning a score to each person surveyed.

Continuously evolving

These are some of the tools we use to assess the perception of the value proposition of a product concept in face-to-face interviews.

We are always looking for ways to improve our tools. When evaluating the impact of User Experience Design choices, we believe it is important to always strive for measurable, readable results.